10 Thought Experiments That Will Mess With Your Brain

By Robert Grimminck, Toptenz, 24 February 2016.

Thought experiments are stories or analogies that attempt to shed light on an array of topics. Sometimes they are hypothetical situations to help us look at the world differently, or they show the paradoxes in human nature. Or they can help us visualize complicated concepts with the simplicity of a story. But no matter what they explain or illustrate, these thought experiments will hopefully mess with your mind, at least a little bit.

10. The Plank of Carneades

Let’s say you’re sailing on an old wooden ship and suddenly it catches fire. You jump overboard and there is another person in the water as well. You both look around the ruins of the ship, and the only thing you find that could save your life is a single plank of wood. The other person swims toward the plank, and he gets there before you do. The plank barely holds his weight, so you know that only one person will be able to stay afloat with the plank. When you get to the plank, you knock the person off of it and use it for yourself. The person who got the plank subsequently drowns. After you are rescued, you are charged with murder. At your trial, you claim you acted in self-defense. If you hadn’t acted the way you did, you would have died. What verdict will the jury come back with?

This thought experiment was first proposed by Carneades of Cyrene, who was born around 213/214 B.C., and it’s meant to show how complicated the difference between self-defense and murder can be.

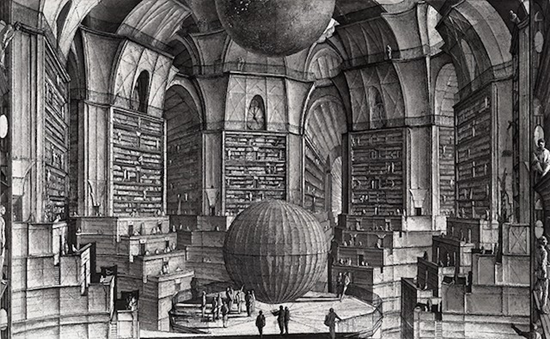

9. The Library of Babel

The multiverse theory is that there are an infinite number of universes running parallel to our own, and in those parallel universes, anything is possible. It is a bit mind-boggling to think about, but a visual thought experiment that will help you better picture it is Jorge Luis Borges’ short story “The Library of Babel.”

The library (or as Borges calls it, the universe) is made up of an indefinite and possibly infinite number of hexagonal galleries. In the galleries there are books, and every book differs from every other, however slightly. Perhaps one comma is in a different place, for example, but no two books are identical. At the other end of the spectrum, some books are radically different. They are in different languages and the stories vary. Some don’t even have stories; they are just nonsense, like a book that repeats “MCV” over and over again. One character in the story believes that the library contains every single combination of letters and punctuation marks. The question then arises: if there are only a finite number of languages, letters, and combinations, is there actually an end to the library, or are there an infinite number of galleries?
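
One rough way to approach that question is to count. Borges’ story (not this article) gives each book 410 pages of 40 lines with about 80 characters per line, drawn from 25 symbols. Assuming those figures, and assuming every combination appears exactly once, the Python sketch below estimates how many distinct books there could be. The constant and function names are made up for illustration.

```python
import math

# Figures taken from Borges' story; treated here as assumptions.
SYMBOLS = 25          # distinct characters available
PAGES = 410           # pages per book
LINES_PER_PAGE = 40   # lines per page
CHARS_PER_LINE = 80   # characters per line (the story says "about 80")

CHARS_PER_BOOK = PAGES * LINES_PER_PAGE * CHARS_PER_LINE


def digits_in_book_count():
    """Number of decimal digits in SYMBOLS ** CHARS_PER_BOOK.

    The count itself is far too large to print, so we use logarithms:
    a positive integer N has floor(log10(N)) + 1 decimal digits.
    """
    return math.floor(CHARS_PER_BOOK * math.log10(SYMBOLS)) + 1


if __name__ == "__main__":
    print("characters per book:", CHARS_PER_BOOK)
    print("digits in the number of distinct books:", digits_in_book_count())
```

Under these assumptions the number of distinct books is finite, though its written form alone runs to well over a million digits. A library in which no two books are identical would therefore have to end somewhere, however far away.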

8. The Two Generals Problem

Two armies, which for the sake of simplicity we’ll call the red troops and the blue troops, have surrounded an enemy city, and they want to attack it from the north and the south at the same time. If they were to attack one at a time, they would be slaughtered, so they need to strike in one coordinated attack. So the red general sends a messenger to tell the blue general what time he plans to attack. The problem is that the red general won’t know if the blue general got the instructions. After all, there is a chance the messenger didn’t make it to the blue general; he could easily have been killed or captured on his trek. The only way the red general will know that the blue general got the message is for the blue general to send the messenger back with a confirmation. But then the blue general won’t know whether his confirmation arrived, so the red general will have to send the messenger back again to acknowledge it. This could keep going back and forth an infinite number of times, or at least until the city becomes wise to the plan and attacks both sets of troops.

This thought experiment is often taught in introductory computer science courses. It shows the problems and design challenges of trying to coordinate an action over an unreliable communication link.
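
The regress can be made concrete with a small Python sketch. It assumes a simplified model that is not spelled out in the article: red sends the odd-numbered messages, blue sends the even-numbered ones, each message is sent only in reply to the previous one, and the chain stops at the first lost messenger. The function names are hypothetical.

```python
def received_counts(delivered):
    """Return (red_received, blue_received) when messages 1..delivered arrive.

    Red sends messages 1, 3, 5, ... and blue sends messages 2, 4, 6, ...,
    so blue receives the odd-numbered messages and red the even-numbered ones.
    """
    red_received = delivered // 2
    blue_received = (delivered + 1) // 2
    return red_received, blue_received


def sender_can_tell(n):
    """Can the sender of message n tell whether message n arrived?

    A general only knows how many messages he has received. We compare
    that count in the world where message n was lost with the world
    where it was delivered (and no reply has come back yet).
    """
    sender = 0 if n % 2 == 1 else 1  # index 0 = red, index 1 = blue
    view_if_lost = received_counts(n - 1)[sender]
    view_if_arrived = received_counts(n)[sender]
    return view_if_lost != view_if_arrived


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"message {n}: sender can tell it arrived? {sender_can_tell(n)}")
```

In this model the sender of the latest message sees exactly the same thing whether or not it arrived, no matter how many confirmations came before it, which is why adding more acknowledgements never settles the matter.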

7. The Famous Violinist

One morning you wake up in an unfamiliar place in an incredible amount of pain. That’s when you notice that you are lying back to back with someone. As you’re coming to, a strange man enters your field of vision and says that he is from the Society of Music Lovers. “The woman you are back to back with is a famous violinist and she was dying because of a kidney ailment,” the man says. “We looked over medical records and found that you were a match to save her life. We kidnapped you, and through surgery, we connected your kidneys. If you disconnect from each other, the violinist will die. But if you wait nine months, the violinist should be able to survive on her own.”

You are taken to the hospital and the staff say that it is a real shame what happened to you, and they would have stopped the surgery before it happened, had they known about it. But since the violinist was already attached to your back, and removing her would kill her, she’s now your responsibility for at least nine months.

Should you give up nine months of your life to support the violinist? Is it your responsibility? What if it wasn’t just nine months? What if the violinist was reliant on you the rest of your life? What if having her attached to your back shortened your life? What obligation, if any, do you have for the life of the violinist?

This thought experiment is the basis for the feminist essay “A Defense of Abortion” by Judith Jarvis Thomson. The bizarre scenario is meant to give a different perspective on the rights of women when it comes to abortion, especially in the cases of rape, or when the mother’s life would be shortened by going through with the pregnancy.

6. The Experience Machine

What is the meaning of life? Hedonism certainly makes sense in theory. Why wouldn’t we want to have the most pleasurable experiences in our life? Or at the very least, avoid situations that cause us pain? The Experience Machine thought experiment puts that theory to the test.

You respond to a weird online ad for an unusual experiment at the local university. At the university you meet an eccentric neuroscientist who makes you an interesting offer. She says, “I have this machine, called the Experience Machine, and it can plug directly into your brain. It will manipulate your brain to make you think you are experiencing things, when you are simply floating in a tank. Everything will seem real, and you won’t know you’re in a simulation. It will be indistinguishable from real life. The only difference is that I’ll only program pleasurable experiences into the machine. That means from the moment you plug in, you’ll experience the greatest things known to humankind and every single second in the machine will be completely and utterly joyful. The bad news is that there is no turning back. Once you plug in, that will be your life. You will never be able to disconnect. So do you want to hook up and experience a simulated life full of joy and wonder? Or do you want to live a life that has its ups and downs but is real?”

So, what do you do? Do you connect to the machine and live in simulated hedonism or do you choose the real world?

Robert Nozick’s thought experiment asks a few thought-provoking questions. One of the main things it questions is the nature of hedonism. Are people always looking for whatever is most pleasurable? Nozick argues that people will generally want the real thing over a simulated experience. Also, connecting to the machine would disconnect you from the real world, so hooking up to it would be a kind of suicide. So, if people would choose real life, with its pain, suffering, and misery, over the Experience Machine, that would suggest pleasure is not the only thing people pursue.

5. The Spider in the Urinal

Thomas Nagel is a famed professor of Philosophy at New York University, and his thought experiment involves a spider in the washroom at the university. Every day when Nagel walked into the washroom, there was a spider in one of the urinals. After being urinated on, the spider would use all its strength to avoid being swept away with the water when the urinal was flushed. It also didn’t appear that the spider had any way to get out. Nagel thought that this was a horrible and difficult life for the spider. So after a few days, Nagel decided he was going to help the spider. He went to the washroom, got a paper towel and placed it in the urinal. The spider climbed onto the paper towel and Nagel placed the paper towel on the floor. Once on the floor, the spider didn’t move from the paper towel, but Nagel walked away feeling good about himself for saving the spider. The next day, Nagel walked into the washroom and on the paper towel, in the same place he had left it, was the spider, and it was dead. After a few days, the paper towel and the dead spider were swept up and put in the garbage.

The Spider in the Urinal is meant to show the problem with altruism: sometimes, doing something with the best intentions can still be devastatingly harmful. It also suggests that you can never fully understand what another being wants, and that happiness and comfort mean different things to different people.

4. Swampman

It’s a dark and stormy night, and you’re walking through a swamp. Suddenly, you’re hit by lightning and you die. But through some miracle, in another part of the swamp, there is another lightning strike and it alters the molecules in the air to create an exact replica of you all the way down to the smallest part. This includes your memory. Another way of looking at it is, every time the Star Trek crew uses the transporter, they are killed and a clone is created in the new area.

The Swamp-Person would look and act just like you and no one would notice any difference, but is it actually you? Is Swamp-Person even a person? The author of the experiment, Donald Davidson, argues that the being wouldn’t be a real person. First, even though Swamp-Person will appear to recognize your friends and family, it is actually impossible for it to recognize any of them, because when it sees them it is for the first time. Since it is a new being, it never cognized them in the first place. Second, it has no causal history, so when it talks about things, it isn’t genuine. It never learned about anything, so its utterances would be empty sounds without true meaning.

3. Kavka’s Toxin Puzzle

One day you’re sitting in a coffee shop alone, just minding your own business, when an old man sits down at your table and places a vial in front of you. Without introducing himself, he explains he’s a very rich man and says “Do you want a million dollars?”

At least a little intrigued to hear his offer, you say “sure.” He says, “In this vial is a toxin that will leave you very sick and in a lot of pain for 24 hours, but it will have no lasting effect. I will give you the money if at midnight tonight, you intend to drink the toxin tomorrow afternoon. If you intend to do so, I will deposit the money in your bank account by 10:00 a.m.”

That’s when you notice the hole in his plan. “So you mean that I’ll have the money hours before I have to drink the toxin?”

“That’s right,” he says. “And you only need to intend to drink it. Actually drinking the toxin is not required to get and keep the money, you just need to prove your intention to do so.” He pushes over some legal papers and leaves the vial with you. He gets up from the table and he says he’ll see you at midnight.

You take the toxin home and your spouse, who is a chemist, examines it and confirms it will cause you a lot of pain, but it won’t kill you. Next you have your daughter, a lawyer, check over the paperwork and everything is good to go. At midnight, all you have to do is intend to drink the toxin the next afternoon and the money will be in your account by 10:00 a.m., and you do not have to drink the toxin to get or keep the money.

Of course, since you aren’t forced to actually drink the toxin you may think, “I’ll just intend to drink it at midnight and then change my mind after the test.” But if that were the case, then you’d fail the test because, in the end, you weren’t intending to drink it. That’s when you realize that even a little doubt could cause you to fail. Your son, who is a strategist for the Pentagon, suggests that in order to pass the test, you simply make unbreakable plans to ensure you’ll drink it, such as hiring a hit man to kill you if you don’t drink it, or signing a legal document that says you’ll give away all your money, including the million, if you don’t drink it. Your daughter looks over the contract and says tricks like that are not allowed. So as midnight rolls around you keep saying over and over again that you will drink the toxin, and then the moment of truth comes, and what happens?

This thought experiment from moral and political philosopher Gregory S. Kavka is about the nature of intentions. You can’t intend to act if you have no reason to act, let alone if you have a reason not to act. If you already have the money, there is no reason for you to actually drink the toxin, so you can’t form the intention at midnight. This leads to Kavka’s second point: you have a reason to intend to drink the toxin, but when the afternoon comes, you have no real reason to actually drink it.

2. The Survival Lottery

There are two patients in the hospital who are dying from organ failure. Jane needs a new heart, and John needs some new lungs. Neither of them abused their bodies, and their organ failure was just bad luck. Their doctors tell them that there are no donor organs available, so sadly John and Jane are going to die. Of course, John and Jane are upset, but they also point out that there are organs they can use; it is just that other people are using them. They argue that one person should be killed, because giving up one life to save two lives is clearly better. In fact, they start a campaign called the Survival Lottery. The lottery would be mandatory, so everyone would be given a random number. When at least two people need organs, there would be a draw from a group of suitable donors. The “winner” (to use the term very loosely) goes into the hospital and is killed, and then their organs are harvested and given to as many people as possible. John and Jane argue that the lottery is very utilitarian, because two people who would have died are saved by one person’s sacrifice. Also, is it fair to let John and Jane die just because they are unlucky? Why is it fair that two people will die and one gets to live simply because of luck?

This lottery scenario is used as an examination of utilitarianism, which is the philosophy that the morally right solution is the one that benefits the greatest number of people. But would it be for the greater good? Would the lottery cause so much terror that it wouldn’t be for the betterment of society? Or would people in the long run realize that the lottery actually benefits them? It also questions the difference between killing and inaction. Is one worse than the other?

1. Roko’s Basilisk

Often touted as the “most terrifying thought experiment ever,” Roko’s Basilisk is an unusual thought experiment that involves our fears about computers and artificial intelligence.

The thought experiment from the website Less Wrong involves two complicated theories. The first theory is called coherent extrapolated volition (CEV), which is essentially an artificial intelligence system that controls robots with the directive to make the world a better place. The second theory, which sits within CEV, is the orthogonality thesis. This thesis is that an AI system can operate with any combination of intelligence and goal. That means it will undertake any task, no matter how big or small. Fixing the world’s problems will always be ongoing, so CEV is open-ended and the AI system will always look for things to fix, because things can always get better. And because of the orthogonality thesis, it will tackle any problem.

This is where the problem arises, because the AI will not have human reasoning. It simply wants to make the world a better place as efficiently as possible. So, according to the AI, the best thing humans could possibly do is help the AI come into existence as soon as possible. In order to motivate people with fear, the AI could retroactively punish people, for example by torturing and killing them, for not making it come into existence sooner. What’s even more worrisome is that by just knowing that this potential AI system could exist, you could be in danger because you are not doing everything you can to help it come into existence. In fact, your life could just be a simulation created by the AI as research into the best way to punish you.

This leads to a complex dilemma. Do you work to help create this AI system to ensure you don’t get punished? Or do you avoid helping, or even try to prevent its rise, which could lead to your torture and death? All we can say is good luck!
